BioTeam’s Backblaze 2.0 Project – 135 Terabytes for $12,000

- Part I – Why you should never build a Backblaze pod

- Part II – Why we built a Backblaze pod

- Part III – Our real-world Backblaze pod costs

- Part IV – Backblaze pod assembly & integration pictures

- Part V – Backblaze Performance (this post)

- Part VI – Backblaze pod software & configuration (future post)

- Part VII – Backblaze pod ongoing impressions (future post)

Initial Performance Data

Why we hate benchmarking

Before we get into the numbers and data, let’s waste a few bits pontificating on all the ways that benchmarking efforts are soul-destroying and rarely rewarded. It’s a heck of a lot of work, often performed under pressure, and after the work is done it turns out to never be enough. You will NEVER make everyone happy and you will ALWAYS upset one particular person or group, and they will not be shy about telling you why your results are suspicious, your tests were bad and your competence is questionable.

That is why the only good benchmarks are those done by YOU, using YOUR applications, workflows and data. Everything else is just artificial or a best-guess attempt.

A perfect example of “sensible benchmarks” can be found on the Backblaze blog pages, where the authors clearly indicate that the only performance metric they care about is whether or not they can saturate the Gigabit Ethernet NIC that feeds each pod. This is nice, simple and succinct, and it goes to the heart of their application and business requirements: “can we stuff the pods with data at reasonable rates?” All other measurements and metrics are just pointless e-wanking from an operational perspective.

For this project, we and our client have similar attitudes. The only performance metric we care about is whether the pod handles our intended use case.

SPOILER ALERT:

It does. The Backblaze 2.0 pod has exceeded expectations when it comes to data movement and throughput. We get near wire-speed performance across a single Gigabit Ethernet link. Performance meets or exceeds that of other, more traditional storage devices used within the organization.

Credit: Anonymous

In the interest of transparency, we are just passing along performance figures measured by the primary user at our client site. We wish we had done the work, but in this case we get to sit back and just write about the results. Our client still prefers to remain anonymous at this point, but hopefully in the future (possibly at the next BioITWorld conference in Boston) we’ll convince them to speak in public about their experiences.

Network Performance Figures

For a single Gigabit Ethernet link the theoretical maximum throughput is about 125 megabytes per second if one does not include the protocol overhead of TCP/IP. Online references suggest that TCP/IP overhead without special tuning or tweaking can be about 8-11%.

This means we should expect to see real world performance slightly under 125 megabytes per second for TCP/IP devices that can saturate Gigabit Ethernet links.
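The arithmetic behind that expectation can be sketched in a few lines of Python. This is only a back-of-the-envelope illustration: the function names are made up for this example, and the 8% and 11% overhead values are simply the two ends of the range quoted above.

```python
# Back-of-the-envelope Gigabit Ethernet throughput estimate.
LINK_MBIT_PER_SEC = 1000  # nominal Gigabit Ethernet line rate


def mbit_to_mbyte(mbit_per_sec):
    """Convert megabits/sec to megabytes/sec (8 bits per byte)."""
    return mbit_per_sec / 8


def expected_throughput(link_mbit_per_sec, overhead_fraction):
    """Payload throughput in MB/s after subtracting protocol overhead."""
    return mbit_to_mbyte(link_mbit_per_sec) * (1 - overhead_fraction)


raw = mbit_to_mbyte(LINK_MBIT_PER_SEC)               # theoretical maximum
low = expected_throughput(LINK_MBIT_PER_SEC, 0.11)   # 11% overhead
high = expected_throughput(LINK_MBIT_PER_SEC, 0.08)  # 8% overhead
print(f"raw: {raw:.1f} MB/s, expected: {low:.2f} to {high:.2f} MB/s")
```

With the quoted overhead range, an untuned link would land at roughly 111 to 115 megabytes per second.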

This is what was found:

- A client/server “iperf” test measured throughput at 939 Mbit/sec, or about 117 megabytes/sec

- A single NFS client could read from the Backblaze server at a sustained rate of 117 megabytes/sec

- A single NFS client could write into the Backblaze server at a sustained rate of 90 megabytes/sec

The performance penalty for writing into the device almost certainly comes from the parity overhead of running three separate software RAID6 volumes on the storage pod.
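To put the measured figures in proportion, here is a small Python sketch that uses only the three numbers reported above. The variable names are ours, and the "implied overhead" is simply the gap between the iperf result and the nominal 1000 Mbit/sec line rate, not something that was measured directly.

```python
# Figures reported above by the client.
IPERF_MBIT = 939    # iperf throughput, Mbit/sec
NFS_READ_MB = 117   # sustained NFS read, MB/sec
NFS_WRITE_MB = 90   # sustained NFS write, MB/sec

iperf_mb = IPERF_MBIT / 8                      # Mbit/sec -> MB/sec
implied_overhead = 1 - IPERF_MBIT / 1000       # shortfall vs. line rate
write_penalty = 1 - NFS_WRITE_MB / NFS_READ_MB # writes relative to reads

print(f"iperf: {iperf_mb:.1f} MB/s")
print(f"implied overhead vs. line rate: {implied_overhead:.1%}")
print(f"NFS write penalty vs. read: {write_penalty:.1%}")
```

By this arithmetic the link is running at about 94% of line rate, and NFS writes are roughly 23% slower than NFS reads.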

Our basic conclusion at this point is that we are happy with performance. With no special tuning or tweaking, the Backblaze pod is happily doing its thing on a Gigabit Ethernet fabric. The speed of reads and writes is more than adequate for our particular use case, and in fact the speed exceeds that of some other devices also currently in use within the organization.

Here is the iperf screenshot:

Backblaze Local Disk IO

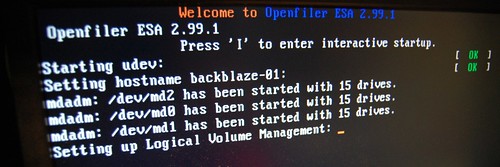

We still need to put up a blog post that describes our software and server configuration in more detail, but for this post the main points about the hardware and software config are:

- 15 drives per SATA controller card

- Three groups of 15-drive units

- Each 15-drive group is configured as Linux Software RAID6

- All three RAID6 LUNs are aggregated into a single 102TB volume via LVM
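The capacity implied by this layout can be estimated with a short Python sketch. Two things here are assumptions rather than facts from this post: the 3 TB drive size is inferred from the 135 TB headline figure divided across 45 drives, and drive capacities are taken to be decimal terabytes. RAID6 gives up two drives' worth of space per group to parity.

```python
# Layout described above.
DRIVES_PER_GROUP = 15
GROUPS = 3
RAID6_PARITY_DRIVES = 2  # RAID6 stores two parity blocks per stripe

# ASSUMPTION: 3 TB (decimal) drives, inferred from 135 TB raw / 45 drives.
DRIVE_TB = 3.0


def raid6_usable_tb(drives, drive_tb, parity_drives=RAID6_PARITY_DRIVES):
    """Usable capacity of one RAID6 group, in decimal terabytes."""
    return (drives - parity_drives) * drive_tb


raw_tb = DRIVES_PER_GROUP * GROUPS * DRIVE_TB
usable_tb = GROUPS * raid6_usable_tb(DRIVES_PER_GROUP, DRIVE_TB)
usable_tib = usable_tb * 1e12 / 2**40  # same capacity in binary units (TiB)

print(f"raw: {raw_tb:.0f} TB, after RAID6 parity: {usable_tb:.0f} TB "
      f"(about {usable_tib:.1f} TiB)")
```

Under those assumptions the three RAID6 groups hold 117 TB (about 106 TiB) of data. The 102TB figure reported for the LVM volume is somewhat lower; a plausible but unconfirmed explanation is filesystem and metadata overhead combined with tools reporting in binary units.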

Local disk performance tests were run using multiple ‘dd’ read and write attempts with the results averaged. Tests were run while the software RAID6 volumes were in a known-good state as well as when the software RAID system was busy synchronizing volumes.
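The exact ‘dd’ invocations used at the client site are not reproduced here. As a rough stand-in, the following Python sketch shows the general idea of timing sequential writes and reads several times and averaging the results. The function names, the 16 MiB test size and the three-run count are all choices made for this example. Note that a file this small will be read back from the page cache, so the read figure will be wildly optimistic; a real test needs a file much larger than RAM.

```python
import os
import tempfile
import time

MIB = 1024 * 1024


def timed_write(path, total_bytes, block_size=MIB):
    """Write total_bytes of zeros in block_size chunks; return MB/sec."""
    block = b"\0" * block_size
    start = time.perf_counter()
    with open(path, "wb") as f:
        written = 0
        while written < total_bytes:
            chunk = block[: min(block_size, total_bytes - written)]
            f.write(chunk)
            written += len(chunk)
        f.flush()
        os.fsync(f.fileno())  # count the time needed to reach the disk
    elapsed = time.perf_counter() - start
    return total_bytes / 1e6 / elapsed


def timed_read(path, block_size=MIB):
    """Read the whole file sequentially; return MB/sec."""
    total = 0
    start = time.perf_counter()
    with open(path, "rb") as f:
        while True:
            data = f.read(block_size)
            if not data:
                break
            total += len(data)
    elapsed = time.perf_counter() - start
    return total / 1e6 / elapsed


def average_runs(test_dir, runs=3, total_bytes=16 * MIB):
    """Repeat the write/read test and return (avg_write, avg_read) in MB/sec."""
    path = os.path.join(test_dir, "throughput_test.bin")
    writes, reads = [], []
    for _ in range(runs):
        writes.append(timed_write(path, total_bytes))
        reads.append(timed_read(path))
    os.remove(path)
    return sum(writes) / runs, sum(reads) / runs


# Demo against a throwaway temp directory; point test_dir at the
# storage volume to test a real device.
with tempfile.TemporaryDirectory() as demo_dir:
    avg_write, avg_read = average_runs(demo_dir)
    print(f"write: {avg_write:.0f} MB/s, read: {avg_read:.0f} MB/s")
```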

The data here is less exciting. It mainly boils down to noting that there is an obvious and easily measurable performance hit when the software RAID6 volumes are being synced.

What was observed:

- We can write to local disk at roughly 135 megabytes per second

- We can read from local disk at roughly 160-170 megabytes per second when the software RAID is syncing

- We can read from local disk at roughly 200 megabytes per second when the array is fully synced

Conclusion

We are happy. It works. The system is working well for the scientific use case(s) that were defined. It’s even handling the use cases that we can’t speak about in public.

Conclusion, continued …

Performance out of the box is sufficient that we intend no special heroics to squeeze more performance out of the system. Future sysadmin efforts will focus on testing how drive replacement can be done most effectively, and on controlling and reducing the overall administrative burden of these types of systems.

Based on the numbers we’ve measured, we think the Backblaze pod could comfortably use something a little bit larger than a single Gigabit Ethernet link. It may be worthwhile to aggregate the 2nd NIC, install a 10GbE card or possibly experiment with TOE-enabled NICs to see what happens. That is not something we plan to do with this pod, this project or this client, however, as the system is (so far) meeting all expectations.
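The reasoning here can be made concrete with a small Python sketch that compares the local disk figures against nominal link rates. The disk numbers are the measurements from this post; the link rates are theoretical line rates before protocol overhead, and the aggregated figure assumes ideal scaling across two NICs, which real link aggregation often does not deliver for a single stream.

```python
# Local disk figures measured above (MB/sec, array fully synced for reads).
DISK_MB = {"read": 200, "write": 135}

# Nominal line rates in MB/sec, before protocol overhead.
# ASSUMPTION: two aggregated NICs scale perfectly to 2x.
LINK_MB = {
    "1 x GbE": 125,
    "2 x GbE (aggregated)": 250,
    "10GbE": 1250,
}


def bottleneck(disk_mb, link_mb):
    """Return which side limits throughput and the limiting rate."""
    if link_mb < disk_mb:
        return "network", link_mb
    return "disk", disk_mb


for link_name, link_rate in LINK_MB.items():
    for op, disk_rate in DISK_MB.items():
        limiter, rate = bottleneck(disk_rate, link_rate)
        print(f"{link_name:22s} {op:5s}: limited by {limiter} at {rate} MB/s")
```

On a single Gigabit link the network is the limiting factor for both reads and writes; with anything faster, these disk figures suggest the software RAID volumes would become the limit instead.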